When a large language model generates text one token at a time, it takes the original prompt and all the tokens generated so far as the input.

For this style of text generation the original prompt acts as the guide (as it is the only ‘constant’ being repeated at the start of every input). Each new token added interacts with the original prompt and the tokens added before it to decide future tokens.

Therefore, each added token has to be selected carefully if we are to generate useful output. That said, each token that is added does not hold the same weight in deciding future tokens. There are tokens that do not commit us to a particular approach in solving the problem and there are those that do. The ones that do are called ‘choice points‘.

For example, if we are solving a mathematical problem the numbers and operators we use are the choice points. If we use the wrong operator (e.g., add instead of subtract) or the wrong digits then the output will not be fit for purpose. Compare this to any additional text we add to the calculation to explain the logic. As long as the correct choice points are used there is flexibility in how we structure the text.

Framing the Problem

Given the prompt has ‘p’ tokens.

Then to generate the first token: xp+1 = Sample(P(x1, x2, … , xp))

Then to generate the second token: Xp+2 = Sample(P(x1, x2, … , xp, xp+1))

And then to generate the ith token where i>0, Xp+i+1 = Sample(P(x1, x2, … , xp, Xp+i))

P represents the LLM that generates the probability distribution over the fixed vocabulary for the next token.

Sample represents the sampling function that samples from the probability distribution to select the next token. In case we set disable sampling then we get greedy sampling (highest scoring token).

Now the scores that we obtain from P are dependent on the initial prompt and subsequent tokens. In certain cases the previous tokens will give clear direction to the model and in other cases the direction will not be clear.

Interpreting Model Behaviour

Clear direction is represented by the separation between the highest and second highest scores. More the gap, clearer is the signal generated by the model. This represents important tokens that do not have alternatives.

On the other hand if the separation is not large then we can say the signal is weak. There could be several reasons for the signal to be weak – such as the model is not clear about what comes next given the tokens presented so far or there is genuine flexibility in the next token.

This separation is critical because if we use sampling (i.e., don’t lock down the response) we could get different flows of text in cases where the separation is weak whilst in cases of high separation – securing the tokens critical for a correct response.

An Example of a Choice Point

I am using Microsoft phi-3.5-mini-instruct running within my LLM Server harness. We are collecting the logits and scores at the generation of each token using a LogitsProcessor. We are not using sampling. We then identify the top two tokens, in terms of the model score, and compare the difference between them. Let us go through an example.

The prompt: What is 14+5*34?

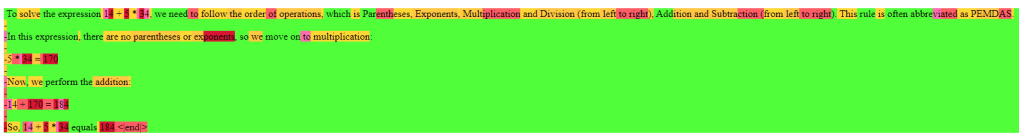

The response (colour coded):

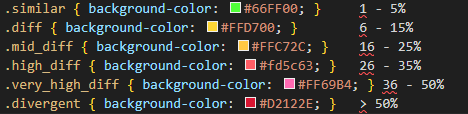

I am calculating the percentage difference between the highest and second highest scoring tokens. Then colour coding the generated tokens based on that difference using some simple CSS and HTML (output above).

We can see the green bits are fairly generic. For example: the tokens ‘In this expression’ is green which means the top two tokens were quite close to each other in terms of the score (2-4%). These tokens are redundant as if we remove these the text still makes sense.

The ‘,‘ after the expression has higher separation (10%) which makes sense given this is required given the tokens that precede it.

The real hight value tokens are those that represent the numbers and operators (e.g., ‘5 * 34 = 170’). Even there the choice points are quite clear. Once the model was given the token ‘5’ as part of the input the ‘*’ and ’34’ became critical for correct calculation. It could have taken another approach: start with ’34’ as the choice point and then ‘* 5’ become critical for the correct calculation.

Another Example

This time let us look at something subjective and not as clear cut as a mathematical calculation. Again we are not using sampling therefore we always pick the highest scoring token.

The prompt: What is the weather likely to be in December?

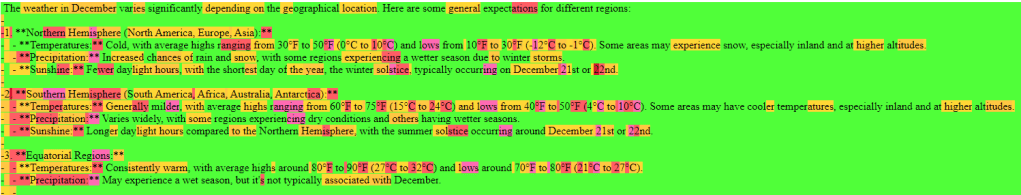

The response (colour coded):

The starting token ‘The’ is not that critical but once it is presented to the LLM the following tokens become clearer. The token ‘significantly’ is not that critical (as we can see from the low difference). It could be removed without changing the accuracy or quality of the output (in my opinion).

In the first major bullet once it had started with the token ‘Nor’ the following tokens (‘thern’, ‘ ‘, ‘Hem’, ‘is’, ‘phere’) become super critical. Interestingly, once it had received the second major bullet (‘**’ without the leading space) as an input it became critical to have ‘Sou’ as the next token as ‘Nor’ was already present.

The Impact

The key question that comes next is: why is this analysis interesting?

In my opinion this is an interesting analysis because of two reasons:

- It helps us evaluate the confidence level of the model very easily.

- It can help us with fine-tuning especially when we want to promote the use of business/domain specific terms over generic ones.

There is even more exciting things waiting when we start sampling. Sampling allows us to build a picture of the ‘optimum’ search space for the response. The LLM and Sampling interact with each other and drive the generation across the ‘high quality’ sequences within the optimum search space. I will attempt to make my second post about this.

Very Cool! Now I understand how AI detectors work.

LikeLike